Building trust through transparent AI governance

To lead responsibly in AI, UAE organisations must move beyond opaque systems and embrace transparency, accountability and human oversight. This article outlines how businesses can strengthen stakeholder trust, reduce regulatory risk and align with the UAE National Strategy for Artificial Intelligence 2031 by adopting a ‘glass box’ governance approach.

Key advantages include:

- Clear alignment with national AI priorities and regulatory expectations

- Stronger institutional trust through transparent, explainable AI (XAI)

- Reduced risk of bias, reputational damage and strategic failure

- Future-proofed governance against emerging threats, including quantum risks

- Practical oversight through continuous monitoring and human accountability

The UAE National Strategy for Artificial Intelligence 2031 is more than a policy document. It is a bold vision to make the nation a global leader in AI, creating new economic opportunities and improving life for individuals and organisations alike. For the banks and government agencies taking part in this transformation, the journey is not just about adopting powerful technology; it is about building and sustaining trust.

However, we need to be honest: the main barrier to that trust is the "black box" problem. The scenario is familiar: an AI system makes an important decision, but no one can explain how or why it reached it. Simply talking about "trustworthy AI" will not solve this. To truly support the nation’s strategy, organisations must build a solid framework for governance and accountability.

A real-world scenario: An AI decision under scrutiny

Imagine a large regional bank that has introduced an advanced AI model to automate its loan and credit approval process. It is lucrative, efficient and swift. But during a routine audit, the Central Bank of the UAE (CBUAE) asks for a detailed explanation of a series of loan rejections that appear to affect a particular demographic disproportionately.

Suddenly, there is a problem. The bank's data science team can verify the model's accuracy, but they cannot properly explain why it reached those specific decisions, whether rejections or approvals. Because the model is built on third-party packages and libraries whose internal logic the team cannot inspect, the AI is effectively a black box.

This lack of transparency creates immediate and significant risks:

- Regulatory non-compliance: Decisions that cannot be shown to be fair can attract harsh penalties.

- Reputational damage: Even rumours of algorithmic bias can erode public and investor trust.

- Strategic failure: The AI initiative, meant as an innovative leap forward, becomes a liability that undermines the bank's alignment with the national vision.

This scenario illustrates one plain truth: for AI to work in the real world, accuracy is not enough. Accountability is essential.

From black box to glass box

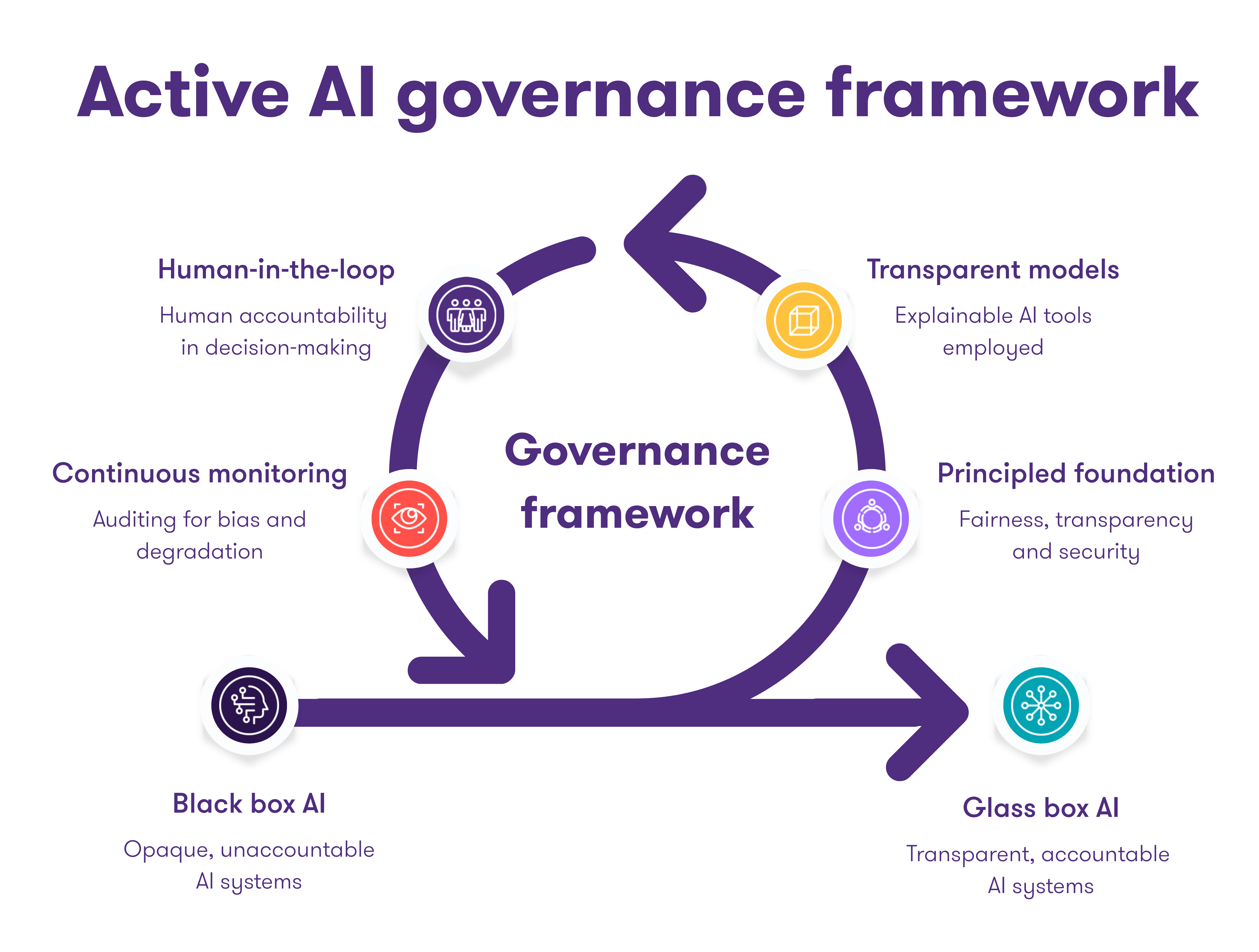

So how do we do better? We must move from passively trusting AI to actively governing it. This means building human accountability into the entire AI lifecycle. The "human-in-the-loop" cannot be a passive observer; they must be an active governor, responsible for ensuring the system is fair.

We can picture this as a structured system of governance that turns the black box into a transparent "glass box" (see Figure 1).

By implementing this system of governance, organisations can move from passive reliance on AI to active, responsible stewardship, building trust with regulators, customers and the wider community.

Figure 1: Structured governance framework to go from a “black box” to a “glass box”

Active AI governance framework

Governance starts with a foundation built on the pillars of the UAE AI Charter: fairness, transparency and security. In practice, this means a scrupulous, honest analysis of the data on which the AI is trained.

If historical data are infused with societal biases, the AI will learn and reinforce them. Real governance means actively identifying and removing such bias before a single line of model code is written.
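One way to begin that data analysis is to compare historical approval rates across groups before any model is trained. The sketch below computes a disparate impact ratio: the lowest group approval rate divided by the highest, where 1.0 means parity. The 0.8 threshold is an assumption borrowed from the US "four-fifths rule" heuristic, not a CBUAE or UAE AI Charter requirement, and the group labels and counts are hypothetical.

```python
from collections import defaultdict


def approval_rates(records):
    """Approval rate per group, computed from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group approval rate divided by the highest (1.0 = parity)."""
    rates = approval_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical historical loan decisions: (applicant group, approved?)
history = ([("group_a", True)] * 80 + [("group_a", False)] * 20
           + [("group_b", True)] * 40 + [("group_b", False)] * 60)

# Assumed threshold: the "four-fifths rule" heuristic (below 0.8 is a red flag).
FOUR_FIFTHS = 0.8
ratio = disparate_impact_ratio(history)
if ratio < FOUR_FIFTHS:
    print(f"Review training data: disparate impact ratio {ratio:.2f} < {FOUR_FIFTHS}")
```

A low ratio does not prove discrimination on its own, but it tells the team which data to interrogate before the model learns from it.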

Rather than always opting for the most advanced model, an approach built for regulatory scrutiny prioritises transparency. This can mean using inherently interpretable models (e.g., decision trees) or applying Explainable AI (XAI) tools such as LIME and SHAP to more complex models.

Think of these tools as interpreters that reveal how an AI reached a specific decision, making it traceable for regulators and explainable to clients.
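To make the idea of an inherently transparent model concrete, here is a minimal sketch of a linear credit scorecard, used instead of a decision tree because its explanation is a simple sum. Every feature name, weight, baseline value and the approval cut-off are hypothetical. Each feature's contribution is measured against a baseline applicant; assuming the baseline values are the training-data means and the features are treated as independent, this breakdown matches what SHAP would report for a linear model, without a separate explainer.

```python
# Hypothetical linear credit scorecard: every weight and baseline is made up.
WEIGHTS = {"income_k": 0.04, "debt_to_income": -3.0, "missed_payments": -0.8}
INTERCEPT = 1.0
# Assumed training-data means, used as the reference ("average") applicant.
BASELINE = {"income_k": 30.0, "debt_to_income": 0.35, "missed_payments": 1.0}
APPROVAL_CUTOFF = 0.0  # hypothetical: approve when score >= 0


def score(applicant: dict) -> float:
    """Linear score: intercept plus the weighted sum of features."""
    return INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())


def explain(applicant: dict) -> dict:
    """Contribution of each feature relative to the baseline applicant."""
    return {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}


applicant = {"income_k": 25.0, "debt_to_income": 0.5, "missed_payments": 3}
decision = "approve" if score(applicant) >= APPROVAL_CUTOFF else "reject"
contributions = explain(applicant)

print(f"Decision: {decision}")
# Most negative (most damaging) factors first.
for feature, value in sorted(contributions.items(), key=lambda kv: kv[1]):
    print(f"  {feature:<16} {value:+.2f}")
```

Because the contributions add up exactly to the gap between this applicant's score and the baseline score, an auditor can check the explanation against the model itself rather than taking it on trust.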

Human oversight is the cornerstone. In key situations, the ultimate decision-maker must be a responsible human, not a machine. This person, or team, has a precise mandate:

- Interrogate: To ask tough questions and challenge the AI's recommendations.

- Override: To overrule an AI recommendation that seems wrong or conflicts with ethical standards.

- Explain: To communicate the final decision to stakeholders and take full responsibility for it.
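The three duties above can be captured in a decision record. The sketch below is a hypothetical structure: the `Decision` class, its fields and the rule that every override needs a written rationale are assumptions for illustration, not a prescribed format. The reviewer sees the AI's explanation (interrogate), may record a different final decision (override), and the record names who decided and why (explain).

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Decision:
    """Hypothetical audit record pairing an AI recommendation with a human decision."""
    case_id: str
    ai_recommendation: str            # e.g. "approve" or "reject"
    ai_explanation: dict              # per-feature contributions shown to the reviewer
    reviewer: Optional[str] = None
    final_decision: Optional[str] = None
    rationale: str = ""
    audit_log: list = field(default_factory=list)

    @property
    def overridden(self) -> bool:
        """True when the human's final decision differs from the AI's."""
        return self.final_decision is not None and self.final_decision != self.ai_recommendation

    def review(self, reviewer: str, final_decision: str, rationale: str = "") -> None:
        """Record the human decision; overrides must be justified in writing."""
        if final_decision != self.ai_recommendation and not rationale.strip():
            raise ValueError("Overriding the AI requires a written rationale")
        self.reviewer = reviewer
        self.final_decision = final_decision
        self.rationale = rationale
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "reviewer": reviewer,
            "ai_recommendation": self.ai_recommendation,
            "final_decision": final_decision,
            "rationale": rationale,
        })

    def summary(self) -> str:
        """Plain-language statement for customers, auditors or regulators."""
        action = "overrode" if self.overridden else "confirmed"
        return (f"Case {self.case_id}: {self.reviewer} {action} the AI recommendation "
                f"'{self.ai_recommendation}'; final decision '{self.final_decision}'. "
                f"Rationale: {self.rationale or 'none recorded'}")


# Usage with hypothetical values.
case = Decision(case_id="LN-0042", ai_recommendation="reject",
                ai_explanation={"missed_payments": -1.6, "debt_to_income": -0.45})
case.review(reviewer="senior_credit_officer", final_decision="approve",
            rationale="Missed payments traced to a documented servicing error.")
print(case.summary())
```

Refusing an override without a rationale is one design choice among many; the point is that accountability is recorded at the moment of decision, not reconstructed later for an audit.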

An AI model is not a "set it and forget it" product. Its accuracy can degrade as the data it encounters in production drifts away from the data it was trained on. A robust governance system therefore includes continuous monitoring to catch declining performance or new types of bias.

Routine audits against standards such as ISO/IEC 42001 and the NIST AI Risk Management Framework help keep the system from becoming biased or non-compliant.
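Continuous monitoring often starts with a drift check on model inputs or scores. The sketch below computes the Population Stability Index (PSI), a metric widely used in credit risk, between training-time and production scores. The choice of PSI, the 10 equal-width bins and the 0.1/0.25 alert bands are common rules of thumb assumed here; neither ISO/IEC 42001 nor the NIST AI RMF prescribes them, and the score data are synthetic.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e).

    Bins are equal-width over the range of `expected`; values outside that
    range fall into the edge bins. `eps` avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def psi_status(value):
    """Rule-of-thumb bands (assumed): <0.1 stable, 0.1 to 0.25 monitor, >=0.25 investigate."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate"


# Synthetic scores: training time vs. a production month where scores have shifted upward.
training_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]

drift = psi(training_scores, production_scores)
print(f"PSI = {drift:.3f} -> {psi_status(drift)}")
```

A drift alert like this does not say whether the model has become unfair; it is a trigger for the human reviewers and routine audits described above to take a closer look, for example by re-running group-level fairness checks on recent decisions.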

Future-proofing governance: The quantum risk

Good governance must also look ahead, and any robust governance model has to anticipate emerging threats. Where AI meets quantum computing, an entirely new threat frontier opens up: sufficiently powerful quantum computers could one day render much of today's public-key cryptography obsolete.

The real nightmare is not simply stolen data. It is a malicious actor using quantum capabilities to subtly poison an AI model, introducing invisible biases or backdoors: a silent corruption of the very intelligence that drives life-and-death decisions. A forward-looking AI strategy must therefore include readiness for a post-quantum world, ensuring our "glass box" is not just open but quantum-proof.

3 practical steps for UAE organisations

Aligning with Vision 2031: A strategic imperative

Enacting a robust AI governance framework is not just a risk-aversion exercise; it is a direct and effective way for an organisation to align itself with the UAE National Strategy for Artificial Intelligence 2031. It positions the organisation not merely as a consumer of AI but as a leader in its responsible implementation.

This approach builds the institutional trust needed to innovate, de-risks AI investment and equips the UAE's leading enterprises to build high-quality, ethical AI. By turning the black box into a glass box, we build systems that are not just smart but also wise, fair and accountable: the true foundation of the UAE's AI-powered future.

Engage our team

- Combine local insight with global best practices to build robust, future-ready AI governance for your organisation.

- Receive tailored assessments and practical recommendations that help you meet regulatory expectations and lead in ethical AI adoption.

Ready to set the standard for responsible AI in the UAE? Connect with our team today and turn trust into your competitive edge.